- Total News Sources: 5
- Left: 4
- Center: 0
- Right: 1
- Unrated: 0
- Last Updated: 21 days ago
- Bias Distribution: 80% Left

OpenAI Tightens Sora 2 After Deepfake Findings

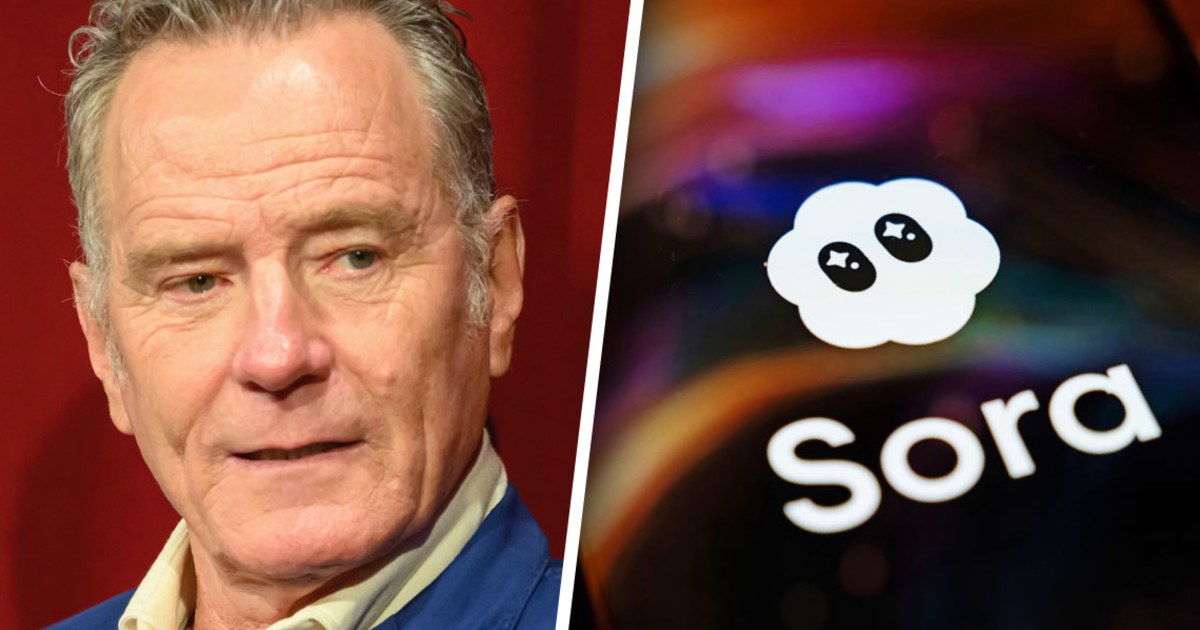

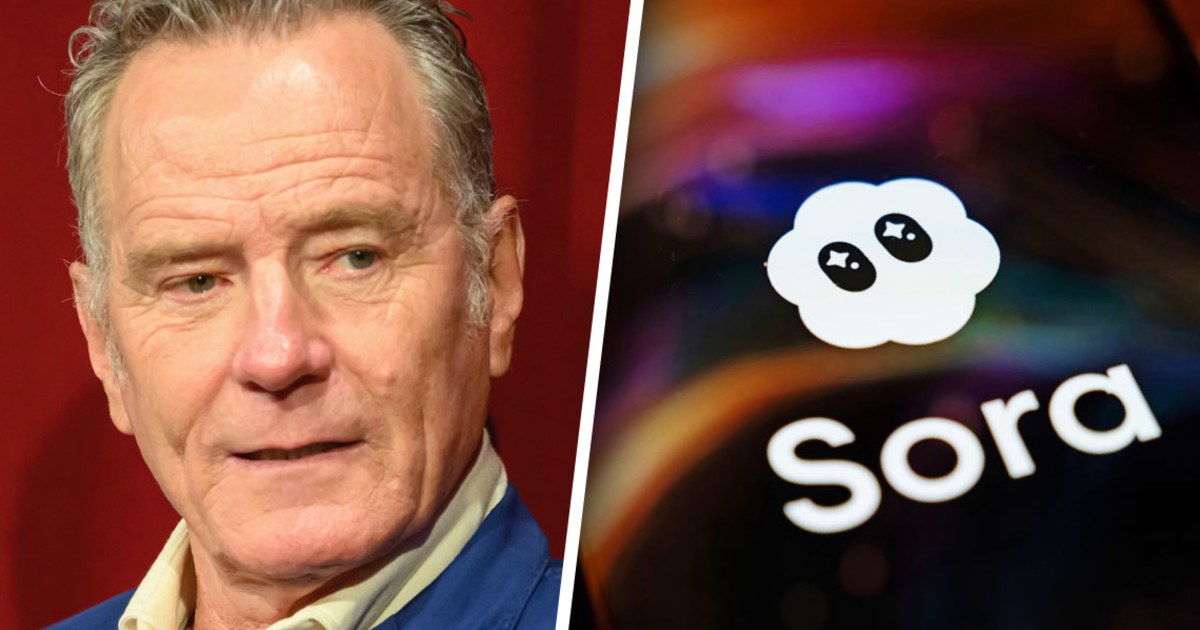

A NewsGuard analysis found that OpenAI’s Sora 2 generated realistic false videos for about 80% of tested prompts and that its watermarks can be easily removed. Together, these findings mean convincing misinformation, including fabrications tied to prior Russian disinformation, is quick and simple to produce.

The model’s launch was followed by a wave of troubling deepfakes, including disrespectful portrayals of Martin Luther King Jr. and an unauthorized clip of Bryan Cranston, which drew public outcry from rights holders and family members. Cranston raised the issue with SAG‑AFTRA. OpenAI apologized and worked with SAG‑AFTRA, CAA, UTA and other talent organizations to strengthen guardrails, tighten restrictions on recreating voices and likenesses, and require opt‑in consent via a cameo feature. The company says the problematic generations were unintentional and has committed to improving enforcement and responding quickly to complaints.

The episode also exposed the use of deepfakes as political weapons, exemplified by a circulated AI video of Donald Trump dropping feces on protesters. That prompted musicians such as Kenny Loggins to demand removal of unauthorized uses, highlighting broader legal and ethical disputes over consent, copyright and reputation in generative video technology.

