- Total News Sources: 12
- Left: 8
- Center: 0
- Right: 0
- Unrated: 4
- Last Updated: 480 days ago
- Bias Distribution: 100% Left

Experts Criticize Whisper's Reliability in Transcriptions

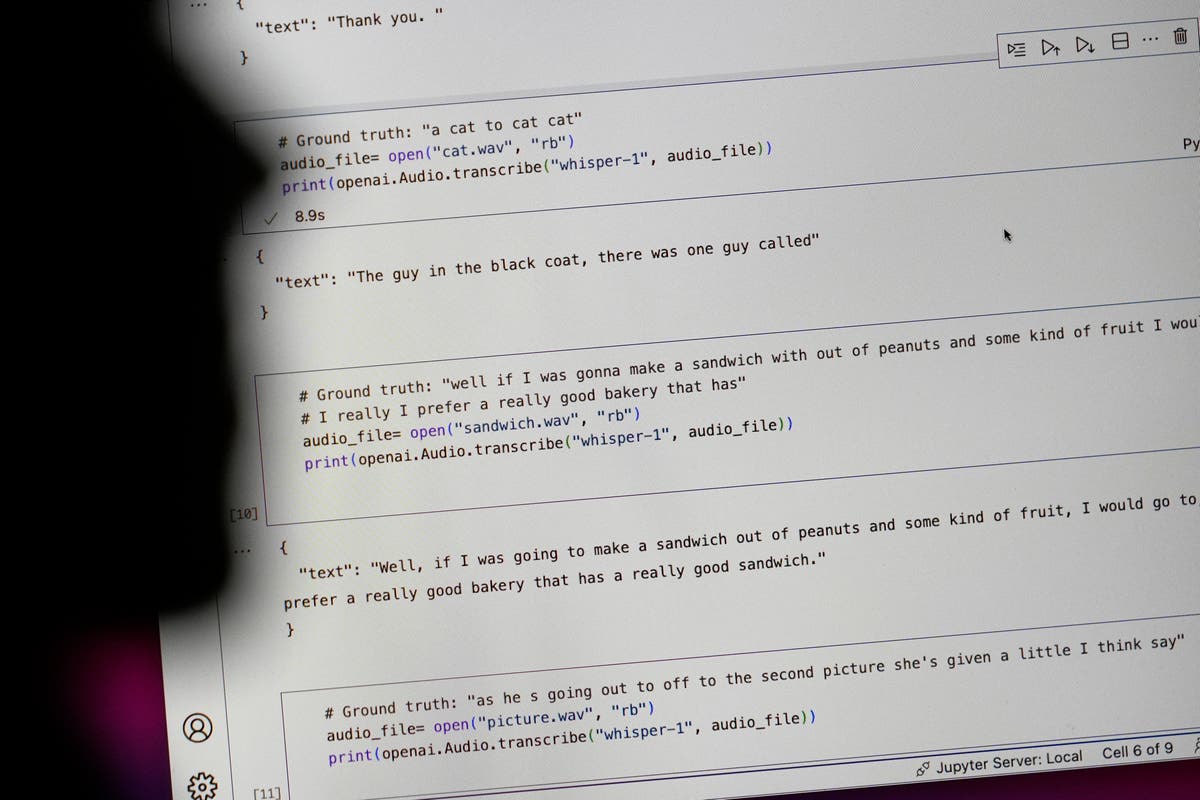

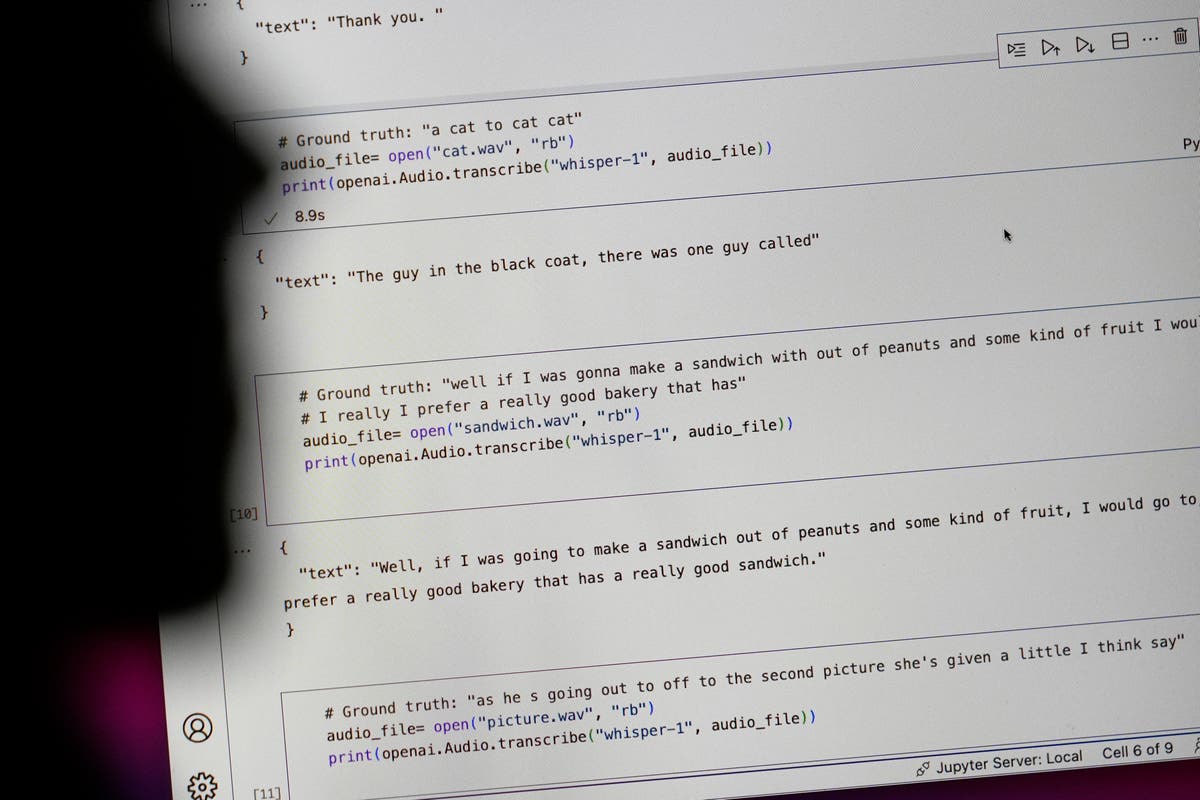

OpenAI's transcription tool, Whisper, is widely used across industries, including healthcare, but is reported to generate 'hallucinations': invented text that can include racial commentary, violent rhetoric, and fabricated medical information. Multiple studies have found such hallucinations in a substantial share of the transcripts examined, in both long and short audio samples. OpenAI warns against using Whisper in 'high-risk domains' such as medical settings, yet many healthcare providers continue to use it to transcribe patient consultations. Researchers note that although Whisper has been integrated into platforms such as Oracle's and Microsoft's cloud services, its reliability remains questionable and the tool needs improvement to reduce these errors.
